Crawlable

What does it mean to be crawlable?

SEO main factors

Crawlable Pages

Search engines can display in their results only pages that crawlers are allowed to scan and that are not blocked from indexing.

If you do not want specific pages to appear in search results, you need to block their indexing.

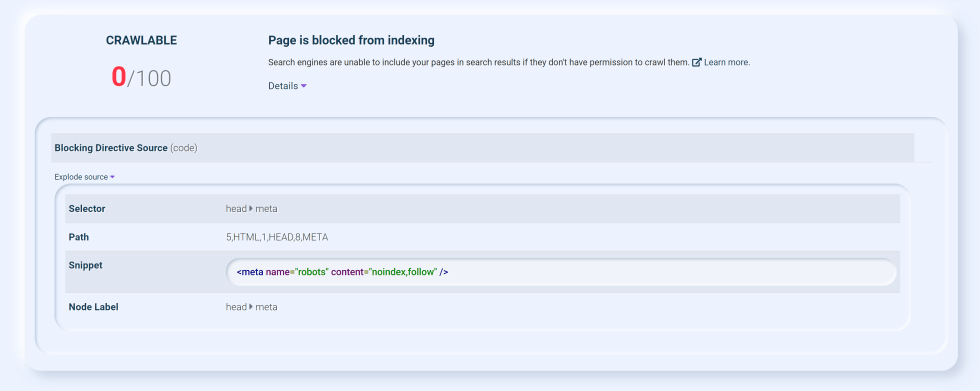

How the SeoChecker indexing audit fails

SeoChecker fails the indexing audit in the following situation:

During an audit, SeoChecker checks the page for elements and HTTP headers that stop all crawlers from indexing it.

For instance:

<meta name="robots" content="noindex"/>

or:

X-Robots-Tag: noindex

If you want to block only specific crawlers rather than all of them, be aware that SeoChecker does not check crawler-specific directives like the following one. We still recommend caution, because hiding a page from a crawler can penalize your website.

<meta name="AdsBot-Google" content="noindex"/>
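The check described above can be pictured with a minimal Python sketch. This is not SeoChecker's actual implementation. The names `RobotsMetaParser`, `has_noindex`, and `blocks_all_crawlers` are hypothetical, and the sketch assumes the response headers and the HTML have already been fetched. It reports only directives aimed at every crawler, so a crawler-specific element such as the AdsBot-Google one is ignored.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the content of every <meta name="robots"> element."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k.lower(): (v or "") for k, v in attrs}
        # Only the generic "robots" name addresses every crawler.
        if attr.get("name", "").strip().lower() == "robots":
            self.directives.append(attr.get("content", ""))


def has_noindex(value):
    """True if a comma-separated directive list contains noindex."""
    return "noindex" in [part.strip().lower() for part in value.split(",")]


def blocks_all_crawlers(headers, html):
    """True if a header or meta element tells every crawler not to index."""
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and has_noindex(value):
            return True
    parser = RobotsMetaParser()
    parser.feed(html)
    return any(has_noindex(d) for d in parser.directives)
```

For example, `blocks_all_crawlers({"X-Robots-Tag": "noindex"}, "<html></html>")` returns `True`, while a page whose only directive is the AdsBot-Google meta element returns `False`.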

How to make sure that your page can be crawled by search engines

First, decide which pages you want to be indexed and which you do not.

Then, for the pages you want to be indexed, remove anything that prevents crawlers from doing their job, such as HTTP headers or <meta> elements.

- if you set HTTP response headers, be sure to remove the X-Robots-Tag header:

X-Robots-Tag: noindex

- if the head of the page contains the following meta tag, be sure to remove it:

<meta name="robots" content="noindex">

- if the head of the page contains meta tags that block particular crawlers, be sure to remove them:

<meta name="Googlebot" content="noindex">
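Before publishing a page that should be indexed, the three items above can be checked together. The following Python sketch is one possible way to do it, under stated assumptions: `CRAWLER_NAMES`, `NoindexFinder`, and `find_noindex` are hypothetical names, the list of crawler names is illustrative rather than complete, and the headers and HTML are passed in as already-fetched values.

```python
from html.parser import HTMLParser

# Hypothetical, incomplete list of meta names to look for; extend as needed.
CRAWLER_NAMES = {"robots", "googlebot", "adsbot-google"}


class NoindexFinder(HTMLParser):
    """Records every crawler-related meta element that contains noindex."""

    def __init__(self):
        super().__init__()
        self.found = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {k: (v or "") for k, v in attrs}
        name = attr.get("name", "").strip().lower()
        content = attr.get("content", "").lower()
        if name in CRAWLER_NAMES and "noindex" in content:
            self.found.append(f'meta name="{name}"')


def find_noindex(headers, html):
    """List every header or meta element that asks crawlers not to index."""
    found = []
    for name, value in headers.items():
        if name.lower() == "x-robots-tag" and "noindex" in value.lower():
            found.append(f"header {name}: {value}")
    finder = NoindexFinder()
    finder.feed(html)
    return found + finder.found
```

An empty list means the sketch found none of the directives listed above; any entry names the header or meta element that still needs to be removed.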