robots.txt

Why robots.txt is not valid

What is a robots.txt?

A robots.txt file is a plain-text file that tells search engines which pages of your website they can crawl.

A misconfigured robots.txt can end up invalid, which causes two kinds of problems:

- Search engines may be unable to crawl your public pages because the robots.txt itself blocks them. As a result, your pages are shown less often in search results.

- Conversely, search engines may crawl pages of your website that you did not mean to show in search results.

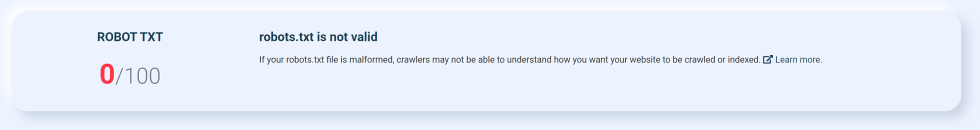

How the SeoChecker robots.txt audit is displayed

An example of a robots.txt file that SeoChecker flags as invalid:

What are the most common mistakes in a robots.txt file?

- No user-agent specified

- Pattern should either be empty, start with "/" or "*"

- Unknown directive

- Invalid sitemap URL

- $ should only be used at the end of the pattern

How can I be sure that robots.txt is working correctly?

Make sure that the robots.txt file is in the root of your domain or subdomain.

N.B. SeoChecker does not check whether your robots.txt file is located in the correct place.
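Since the file has to sit at the root of the domain or subdomain, its expected address can be derived from any page URL. The following is a minimal sketch, not part of SeoChecker; the function name `robots_txt_url` and the example URLs are assumptions chosen for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Build the root-level robots.txt URL for the host that serves page_url."""
    parts = urlsplit(page_url)
    # Keep the scheme and host (including any subdomain), drop path, query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_txt_url("https://blog.example.com/posts/1?page=2"))
# https://blog.example.com/robots.txt
```

Note that each subdomain gets its own address, so `blog.example.com` and `example.com` are checked separately.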

How can I resolve issues with the robots.txt file?

1. Avoid HTTP 5XX status code

First and most important, the robots.txt file must not return an HTTP 5XX status code.

With this error, search engines cannot tell which pages you want crawled, so they may stop trying to crawl your website, which prevents your new content from being indexed.
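One way to check this yourself is to request the file and look at the status code. The sketch below uses only Python's standard library; the helper names `robots_status` and `is_server_error`, the 10-second timeout, and the `opener` parameter (there so the function can be exercised without a network) are assumptions made for illustration.

```python
import urllib.error
import urllib.request


def robots_status(url, opener=urllib.request.urlopen):
    """Return the HTTP status code served for a robots.txt URL."""
    try:
        with opener(url, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4XX and 5XX responses;
        # the status code is available on the exception.
        return err.code


def is_server_error(status):
    """True for any HTTP 5XX status code."""
    return 500 <= status <= 599
```

For example, `is_server_error(robots_status("https://example.com/robots.txt"))` should be `False` for a healthy site.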

2. robots.txt file should not be larger than 500 KiB

Keep the robots.txt file smaller than 500 KiB; otherwise search engines may stop processing it halfway through.

How can I keep the file small?

Do not exclude pages one by one; target groups of similar URLs instead. For instance, to disallow crawling of PDF files, use one pattern that covers all PDF URLs rather than listing each file:

disallow: /*.pdf
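To illustrate why a single pattern can stand in for many URLs, here is a rough sketch of a size check and of wildcard matching. It assumes the commonly documented semantics in which `*` matches any run of characters, a trailing `$` anchors the end of the URL, and patterns otherwise match as prefixes; individual crawlers may differ. The names `within_size_limit` and `pattern_matches` are hypothetical.

```python
import re

MAX_ROBOTS_BYTES = 500 * 1024  # the 500 KiB limit mentioned above


def within_size_limit(content: bytes) -> bool:
    """True if the raw robots.txt content does not exceed 500 KiB."""
    return len(content) <= MAX_ROBOTS_BYTES


def pattern_matches(pattern: str, path: str) -> bool:
    """Check a URL path against an allow/disallow pattern (assumed semantics)."""
    anchored = pattern.endswith("$")
    body = pattern[:-1] if anchored else pattern
    # Each '*' becomes '.*'; every other character is matched literally.
    regex = ".*".join(re.escape(part) for part in body.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start of the path, giving prefix matching.
    return re.match(regex, path) is not None


print(pattern_matches("/*.pdf", "/docs/guide.pdf"))       # True
print(pattern_matches("/*.pdf", "/reports/2023/q1.pdf"))  # True
print(pattern_matches("/*.pdf", "/docs/guide.html"))      # False
```

One line therefore covers every PDF URL, which keeps the file far below the size limit.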

3. Focus on format errors

- Not every line format is accepted: a robots.txt file may contain only empty lines, comments, and directives in the “name: value” format.

- allow and disallow values must be empty or begin with / or *.

- Do not put $ in the middle of a value; it should only be used at the end of a pattern.
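The format rules above can be sketched as a per-line check. This is an illustrative approximation, not SeoChecker's actual implementation: the function name `check_line` is hypothetical, and the set of known directives is an assumption limited to the four directives this article mentions.

```python
# Assumption: only the directives mentioned in this article count as known.
KNOWN_DIRECTIVES = {"user-agent", "allow", "disallow", "sitemap"}


def check_line(line: str):
    """Return an error message for one robots.txt line, or None if it looks valid."""
    text = line.split("#", 1)[0].strip()  # drop any comment
    if not text:
        return None  # empty lines and pure comments are accepted
    name, sep, value = text.partition(":")
    if not sep:
        return 'Line is not in "name: value" format'
    name = name.strip().lower()
    value = value.strip()
    if name not in KNOWN_DIRECTIVES:
        return "Unknown directive"
    if name in ("allow", "disallow"):
        if value and not value.startswith(("/", "*")):
            return 'Pattern should either be empty, start with "/" or "*"'
        if "$" in value[:-1]:
            return "$ should only be used at the end of the pattern"
    return None


print(check_line("disallow: /*.pdf$"))      # None
print(check_line("disallow: downloads/"))   # Pattern should either be empty, start with "/" or "*"
print(check_line("disallow: /file$.html"))  # $ should only be used at the end of the pattern
```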

4. user-agent must have a value

Search engine crawlers follow the instructions listed under their user-agent name, so every user-agent directive must have a value. To target a specific search engine crawler, use a user-agent name from the list that search engine publishes.

To match all crawlers that are not otherwise matched, use *:

NO

user-agent:

disallow: /downloads/

No user agent is defined.

YES

user-agent: *

disallow: /downloads/

user-agent: magicsearchbot

disallow: /uploads/

A general user agent and a magicsearchbot user agent are defined.
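To see how these two sections apply to different crawlers, the valid example can be fed to Python's standard-library `urllib.robotparser`. This is only one parser implementation and real search engines may behave differently; `otherbot` and the file paths are made-up names for illustration.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
user-agent: *
disallow: /downloads/

user-agent: magicsearchbot
disallow: /uploads/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# magicsearchbot follows its own section, so only /uploads/ is blocked for it.
print(parser.can_fetch("magicsearchbot", "https://example.com/uploads/a.png"))    # False
print(parser.can_fetch("magicsearchbot", "https://example.com/downloads/a.zip"))  # True

# Any other crawler falls back to the general "*" section.
print(parser.can_fetch("otherbot", "https://example.com/downloads/a.zip"))  # False
print(parser.can_fetch("otherbot", "https://example.com/uploads/a.png"))    # True
```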

5. Do not write allow or disallow directives before user-agent

The sections of a robots.txt file are defined by user-agent names, which tell search engine crawlers which instructions to follow. An instruction placed before the first user-agent name therefore belongs to no section, and crawlers will not follow it.

Furthermore, crawlers follow the most specific user-agent name that matches them: given both user-agent: * and user-agent: Googlebot-Image, the matching crawler follows the second section and ignores the first.

NO

# start of file

disallow: /downloads/

user-agent: magicsearchbot

allow: /

No search engine crawler will read the disallow: /downloads/ directive.

YES

# start of file

user-agent: *

disallow: /downloads/

All search engines are disallowed from crawling the /downloads folder.
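The difference between the two files can be reproduced with Python's standard-library `urllib.robotparser`, which also ignores directives that appear before any user-agent line. Treat this as a sketch of one parser's behavior rather than a guarantee about every crawler; the helper `can_crawl`, the crawler name `otherbot`, and the file path are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def can_crawl(robots_txt: str, agent: str, path: str) -> bool:
    """Parse robots_txt and report whether agent may fetch path on example.com."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, "https://example.com" + path)


MISPLACED = """\
# start of file
disallow: /downloads/

user-agent: magicsearchbot
allow: /
"""

FIXED = """\
# start of file
user-agent: *
disallow: /downloads/
"""

# The disallow line precedes every user-agent, so it is dropped and crawling is allowed.
print(can_crawl(MISPLACED, "otherbot", "/downloads/file.zip"))  # True
# Inside a "user-agent: *" section, the same directive takes effect.
print(can_crawl(FIXED, "otherbot", "/downloads/file.zip"))      # False
```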

6. sitemap must be provided with an absolute URL

To help search engines discover the pages of your website, you should provide a sitemap file. It usually contains an up-to-date list of all the URLs on your website, with information about when each was last changed.

If you submit a sitemap file in robots.txt, make sure you use an absolute URL.

NO

sitemap: /sitemap-file.xml

YES

sitemap: https://example.com/sitemap-file.xml
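A quick way to test whether a sitemap value is absolute is to check that it has both a scheme and a host. The sketch below assumes that only `http` and `https` URLs are acceptable; the function name `is_absolute_sitemap_url` is hypothetical.

```python
from urllib.parse import urlparse


def is_absolute_sitemap_url(value: str) -> bool:
    """True if value has an http(s) scheme and a host, i.e. is an absolute URL."""
    parts = urlparse(value.strip())
    return parts.scheme in ("http", "https") and bool(parts.netloc)


print(is_absolute_sitemap_url("/sitemap-file.xml"))                     # False
print(is_absolute_sitemap_url("https://example.com/sitemap-file.xml"))  # True
```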